Pessimism of the Intellect, Optimism of the Will Favorite posts | Manifold podcast | Twitter: @hsu_steve

Sunday, March 25, 2018

Outlier selection via noisy genomic predictors

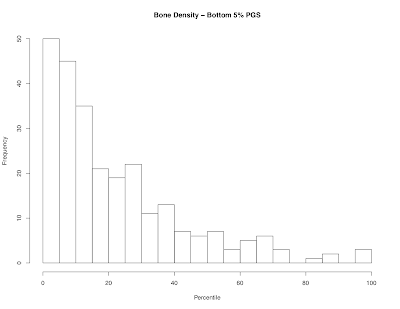

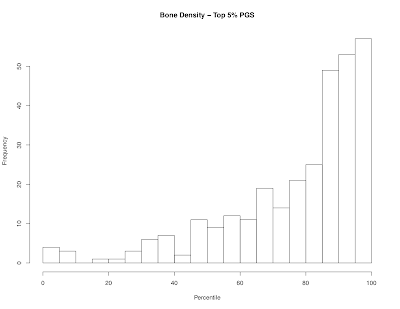

We recently used machine learning techniques to build polygenic predictors for a number of complex traits. One of these traits is bone density, for which the predictor correlates r ≈ 0.45 with actual bone density. This is far from perfect, but good enough to identify outliers, as illustrated above.

The figures above show the actual bone density distribution of individuals who are in the top or bottom 5 percent for predictor score. You can see that people with low/high scores are overwhelmingly likely to be below/above average on the phenotype, with a good chance of being in the extreme left/right tail of the distribution.
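The figures come from real data that are not reproduced here. As a rough check on why an r ≈ 0.45 predictor is enough to pick out outliers, here is a minimal simulation. It assumes (an idealization) that predictor score and phenotype are standardized and jointly normal with correlation r = 0.45; the function names are hypothetical. It selects the top and bottom 5 percent by score and summarizes the phenotype of those groups, alongside the closed-form bivariate-normal mean E[phenotype | score in top 5%] = r·φ(z)/0.05, where z is the 95th percentile of a standard normal.

```python
import random
from statistics import NormalDist, fmean


def simulate_outlier_selection(r=0.45, n=100_000, tail=0.05, seed=1):
    """Simulate (score, phenotype) pairs with correlation r, then summarize the
    phenotype of people in the top and bottom `tail` fraction of scores."""
    rng = random.Random(seed)
    noise_sd = (1.0 - r * r) ** 0.5
    people = []
    for _ in range(n):
        score = rng.gauss(0.0, 1.0)
        # phenotype = r * score + independent noise, so corr = r and variance = 1
        phenotype = r * score + noise_sd * rng.gauss(0.0, 1.0)
        people.append((score, phenotype))

    people.sort(key=lambda p: p[0])          # rank everyone by predictor score
    k = int(tail * n)
    low = [ph for _, ph in people[:k]]       # bottom 5% of scores
    high = [ph for _, ph in people[-k:]]     # top 5% of scores

    ranked = sorted(ph for _, ph in people)  # phenotype distribution of the whole population
    p10, p90 = ranked[int(0.10 * n)], ranked[int(0.90 * n)]

    return {
        "high_mean": fmean(high),
        "low_mean": fmean(low),
        "high_above_avg": sum(ph > 0 for ph in high) / k,
        "low_below_avg": sum(ph < 0 for ph in low) / k,
        "high_in_top_decile": sum(ph > p90 for ph in high) / k,
        "low_in_bottom_decile": sum(ph < p10 for ph in low) / k,
    }


def expected_mean_of_top(r=0.45, tail=0.05):
    """Closed form for the bivariate normal: E[phenotype | score in top tail] = r * pdf(z) / tail."""
    z = NormalDist().inv_cdf(1.0 - tail)
    return r * NormalDist().pdf(z) / tail


stats = simulate_outlier_selection()
for name, value in stats.items():
    print(f"{name}: {value:.3f}")
print(f"analytic high_mean: {expected_mean_of_top():.3f}")
```

Under these assumptions the top-5% group averages a bit under one standard deviation above the mean, roughly 85 percent of it lies above average, and about a third of it lands in the top decile of the phenotype (versus 10 percent for a random person), which is the qualitative pattern the figures show.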

If, for example, very low bone density elevates likelihood of osteoporosis or fragile bones, then individuals with low polygenic score would have increased risk for those medical conditions and should receive extra care and additional monitoring as they age.

Similarly, if one had a cognitive ability predictor with r ≈ 0.45, the polygenic score would allow the identification of individuals likely to be well below or above average in ability.

I predict this will be the case relatively soon. Much sooner than most people think ;-)

Here is a recent talk I gave at MSU: Genomic Prediction of Complex Traits

Saturday, March 24, 2018

Public Troubled by Deep State (Monmouth Poll)

If you use the term Deep State in the current political climate you are liable to be declared a right wing conspiracy nut. But it was Senator Chuck Schumer who warned Trump (on Rachel Maddow's show) that


“Let me tell you, you take on the intelligence community, they have six ways from Sunday at getting back at you,”

In 2014 it was Senator Dianne Feinstein who accused the CIA (correctly, it turns out) of spying on Congressional staffers working for the Intelligence Committee. Anyone who is paying attention now knows that the Obama FBI/DOJ used massive government surveillance powers against the Trump team during and after the election. (The Title I FISA warrant granted against Carter Page allowed queries against intercepted and stored communications with prior associates, including US citizens...) Had Trump lost the election, none of this would ever have come to light.

If this is not a Deep State, then what is?

Monmouth University: A majority of the American public believe that the U.S. government engages in widespread monitoring of its own citizens and worry that the U.S. government could be invading their own privacy. The Monmouth University Poll also finds a large bipartisan majority who feel that national policy is being manipulated or directed by a “Deep State” of unelected government officials. Americans of color on the center and left and NRA members on the right are among those most worried about the reach of government prying into average citizens’ lives.

Just over half of the public is either very worried (23%) or somewhat worried (30%) about the U.S. government monitoring their activities and invading their privacy. There are no significant partisan differences – 57% of independents, 51% of Republicans, and 50% of Democrats are at least somewhat worried the federal government is monitoring their activities. Another 24% of the American public are not too worried and 22% are not at all worried.

Fully 8-in-10 believe that the U.S. government currently monitors or spies on the activities of American citizens, including a majority (53%) who say this activity is widespread and another 29% who say such monitoring happens but is not widespread. Just 14% say this monitoring does not happen at all. There are no substantial partisan differences in these results.

“This is a worrisome finding. The strength of our government relies on public faith in protecting our freedoms, which is not particularly robust. And it’s not a Democratic or Republican issue. These concerns span the political spectrum,” said Patrick Murray, director of the independent Monmouth University Polling Institute.

Few Americans (18%) say government monitoring or spying on U.S. citizens is usually justified, with most (53%) saying it is only sometimes justified. Another 28% say this activity is rarely or never justified. Democrats (30%) and independents (31%) are somewhat more likely than Republicans (21%) to say government monitoring of U.S. citizens is rarely or never justified.

Turning to the Washington political infrastructure as a whole, 6-in-10 Americans (60%) feel that unelected or appointed government officials have too much influence in determining federal policy. Just 26% say the right balance of power exists between elected and unelected officials in determining policy. Democrats (59%), Republicans (59%) and independents (62%) agree that appointed officials hold too much sway in the federal government.

“We usually expect opinions on the operation of government to shift depending on which party is in charge. But there’s an ominous feeling by Democrats and Republicans alike that a ‘Deep State’ of unelected operatives are pulling the levers of power,” said Murray.

Few Americans (13%) are very familiar with the term “Deep State;” another 24% are somewhat familiar, while 63% say they are not familiar with this term. However, when the term is described as a group of unelected government and military officials who secretly manipulate or direct national policy, nearly 3-in-4 (74%) say they believe this type of apparatus exists in Washington. This includes 27% who say it definitely exists and 47% who say it probably exists. Only 1-in-5 say it does not exist (16% probably not and 5% definitely not). Belief in the probable existence of a Deep State comes from more than 7-in-10 Americans in each partisan group, although Republicans (31%) and independents (33%) are somewhat more likely than Democrats (19%) to say that the Deep State definitely exists.

Friday, March 23, 2018

Genetics and Group Differences: David Reich (Harvard) in NYTimes

Harvard geneticist David Reich writes below in the New York Times. The prospect that human ancestry clusters ("races") might differ in allele frequencies, leading to quantifiable group differences, has been looming for a long time. Reich writes


I am worried that well-meaning people who deny the possibility of substantial biological differences among human populations are digging themselves into an indefensible position.

See Metric on the space of genomes (2007), Human genetic variation and Lewontin's fallacy in pictures (2008), and What's New Since Montagu? (2014).

How Genetics Is Changing Our Understanding of ‘Race’

By David Reich

March 23, 2018

In 1942, the anthropologist Ashley Montagu published “Man’s Most Dangerous Myth: The Fallacy of Race,” an influential book that argued that race is a social concept with no genetic basis. A classic example often cited is the inconsistent definition of “black.” In the United States, historically, a person is “black” if he has any sub-Saharan African ancestry; in Brazil, a person is not “black” if he is known to have any European ancestry. If “black” refers to different people in different contexts, how can there be any genetic basis to it?

Beginning in 1972, genetic findings began to be incorporated into this argument. That year, the geneticist Richard Lewontin published an important study of variation in protein types in blood. He grouped the human populations he analyzed into seven “races” — West Eurasians, Africans, East Asians, South Asians, Native Americans, Oceanians and Australians — and found that around 85 percent of variation in the protein types could be accounted for by variation within populations and “races,” and only 15 percent by variation across them. To the extent that there was variation among humans, he concluded, most of it was because of “differences between individuals.”
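To make the "85 percent within, 15 percent between" kind of split concrete: Lewontin's 1972 analysis used a Shannon-information measure of diversity across many loci and three levels (within populations, among populations within "races", among "races"). The sketch below swaps in the simpler heterozygosity-based partition (the logic behind F_ST) for a single biallelic locus and equal-sized groups. The allele frequencies are hypothetical, and `apportion_diversity` is an illustrative name.

```python
from statistics import fmean


def apportion_diversity(group_freqs):
    """Split expected heterozygosity at one biallelic locus into within- and
    between-group fractions, assuming equal-sized groups.

    Returns (fraction within groups, fraction between groups); the second is F_ST.
    """
    p_bar = fmean(group_freqs)
    h_total = 2 * p_bar * (1 - p_bar)  # diversity of the pooled population
    if h_total == 0:
        raise ValueError("locus is not polymorphic in the pooled sample")
    h_within = fmean([2 * p * (1 - p) for p in group_freqs])  # mean diversity inside each group
    within = h_within / h_total
    return within, 1 - within


within, between = apportion_diversity([0.3, 0.7])
print(f"within: {within:.2f}, between: {between:.2f}")  # within: 0.84, between: 0.16
```

Even with allele frequencies as different as 0.3 and 0.7, most of the diversity at this locus sits inside each group, which is the sense in which a single-locus partition like Lewontin's attributes most variation to "differences between individuals."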

In this way, a consensus was established that among human populations there are no differences large enough to support the concept of “biological race.” Instead, it was argued, race is a “social construct,” a way of categorizing people that changes over time and across countries.

It is true that race is a social construct. It is also true, as Dr. Lewontin wrote, that human populations “are remarkably similar to each other” from a genetic point of view.

But over the years this consensus has morphed, seemingly without questioning, into an orthodoxy. The orthodoxy maintains that the average genetic differences among people grouped according to today’s racial terms are so trivial when it comes to any meaningful biological traits that those differences can be ignored.

The orthodoxy goes further, holding that we should be anxious about any research into genetic differences among populations. The concern is that such research, no matter how well-intentioned, is located on a slippery slope that leads to the kinds of pseudoscientific arguments about biological difference that were used in the past to try to justify the slave trade, the eugenics movement and the Nazis’ murder of six million Jews.

I have deep sympathy for the concern that genetic discoveries could be misused to justify racism. But as a geneticist I also know that it is simply no longer possible to ignore average genetic differences among “races.”

Groundbreaking advances in DNA sequencing technology have been made over the last two decades. These advances enable us to measure with exquisite accuracy what fraction of an individual’s genetic ancestry traces back to, say, West Africa 500 years ago — before the mixing in the Americas of the West African and European gene pools that were almost completely isolated for the last 70,000 years. With the help of these tools, we are learning that while race may be a social construct, differences in genetic ancestry that happen to correlate to many of today’s racial constructs are real.

Recent genetic studies have demonstrated differences across populations not just in the genetic determinants of simple traits such as skin color, but also in more complex traits like bodily dimensions and susceptibility to diseases. For example, we now know that genetic factors help explain why northern Europeans are taller on average than southern Europeans, why multiple sclerosis is more common in European-Americans than in African-Americans, and why the reverse is true for end-stage kidney disease.

I am worried that well-meaning people who deny the possibility of substantial biological differences among human populations are digging themselves into an indefensible position, one that will not survive the onslaught of science. I am also worried that whatever discoveries are made — and we truly have no idea yet what they will be — will be cited as “scientific proof” that racist prejudices and agendas have been correct all along, and that those well-meaning people will not understand the science well enough to push back against these claims.

This is why it is important, even urgent, that we develop a candid and scientifically up-to-date way of discussing any such differences, instead of sticking our heads in the sand and being caught unprepared when they are found.

To get a sense of what modern genetic research into average biological differences across populations looks like, consider an example from my own work. Beginning around 2003, I began exploring whether the population mixture that has occurred in the last few hundred years in the Americas could be leveraged to find risk factors for prostate cancer, a disease that occurs 1.7 times more often in self-identified African-Americans than in self-identified European-Americans. This disparity had not been possible to explain based on dietary and environmental differences, suggesting that genetic factors might play a role.

Self-identified African-Americans turn out to derive, on average, about 80 percent of their genetic ancestry from enslaved Africans brought to America between the 16th and 19th centuries. My colleagues and I searched, in 1,597 African-American men with prostate cancer, for locations in the genome where the fraction of genes contributed by West African ancestors was larger than it was elsewhere in the genome. In 2006, we found exactly what we were looking for: a location in the genome with about 2.8 percent more African ancestry than the average.
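A back-of-the-envelope sketch of why such an excess stands out, under loudly simplified assumptions: "2.8 percent more African ancestry" is read as 2.8 percentage points above a genome-wide average of about 80 percent, and each of the 2 × 1,597 chromosome copies at the locus is treated as an independent draw. This is not the statistic the study used; it ignores variation in individual admixture, correlation between nearby loci, and the correction needed when scanning the whole genome. The helper name is hypothetical.

```python
from math import sqrt


def admixture_excess_z(n_men, genome_mean, local_mean):
    """Hypothetical helper: z-score for excess local ancestry at one locus.

    Simplified null model: each of the 2 * n_men chromosome copies at the locus
    is independently of West African origin with probability genome_mean.
    """
    n_copies = 2 * n_men
    se = sqrt(genome_mean * (1 - genome_mean) / n_copies)  # binomial standard error
    return (local_mean - genome_mean) / se


z = admixture_excess_z(n_men=1597, genome_mean=0.80, local_mean=0.80 + 0.028)
print(f"z = {z:.2f}")  # z = 3.96 under these assumptions
```

A value near 4 would be notable for a single pre-specified locus, but a genome-wide scan tests many loci at once, so real admixture-mapping analyses use stricter thresholds and models that handle the complications listed above.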

When we looked in more detail, we found that this region contained at least seven independent risk factors for prostate cancer, all more common in West Africans. Our findings could fully account for the higher rate of prostate cancer in African-Americans than in European-Americans. We could conclude this because African-Americans who happen to have entirely European ancestry in this small section of their genomes had about the same risk for prostate cancer as random Europeans.

Did this research rely on terms like “African-American” and “European-American” that are socially constructed, and did it label segments of the genome as being probably “West African” or “European” in origin? Yes. Did this research identify real risk factors for disease that differ in frequency across those populations, leading to discoveries with the potential to improve health and save lives? Yes.

While most people will agree that finding a genetic explanation for an elevated rate of disease is important, they often draw the line there. Finding genetic influences on a propensity for disease is one thing, they argue, but looking for such influences on behavior and cognition is another.

But whether we like it or not, that line has already been crossed. A recent study led by the economist Daniel Benjamin compiled information on the number of years of education from more than 400,000 people, almost all of whom were of European ancestry. After controlling for differences in socioeconomic background, he and his colleagues identified 74 genetic variations that are over-represented in genes known to be important in neurological development, each of which is incontrovertibly more common in Europeans with more years of education than in Europeans with fewer years of education.

It is not yet clear how these genetic variations operate. A follow-up study of Icelanders led by the geneticist Augustine Kong showed that these genetic variations also nudge people who carry them to delay having children. So these variations may be explaining longer times at school by affecting a behavior that has nothing to do with intelligence.

This study has been joined by others finding genetic predictors of behavior. One of these, led by the geneticist Danielle Posthuma, studied more than 70,000 people and found genetic variations in more than 20 genes that were predictive of performance on intelligence tests.

Is performance on an intelligence test or the number of years of school a person attends shaped by the way a person is brought up? Of course. But does it measure something having to do with some aspect of behavior or cognition? Almost certainly. And since all traits influenced by genetics are expected to differ across populations (because the frequencies of genetic variations are rarely exactly the same across populations), the genetic influences on behavior and cognition will differ across populations, too.

You will sometimes hear that any biological differences among populations are likely to be small, because humans have diverged too recently from common ancestors for substantial differences to have arisen under the pressure of natural selection. This is not true. The ancestors of East Asians, Europeans, West Africans and Australians were, until recently, almost completely isolated from one another for 40,000 years or longer, which is more than sufficient time for the forces of evolution to work. Indeed, the study led by Dr. Kong showed that in Iceland, there has been measurable genetic selection against the genetic variations that predict more years of education in that population just within the last century.

To understand why it is so dangerous for geneticists and anthropologists to simply repeat the old consensus about human population differences, consider what kinds of voices are filling the void that our silence is creating. Nicholas Wade, a longtime science journalist for The New York Times, rightly notes in his 2014 book, “A Troublesome Inheritance: Genes, Race and Human History,” that modern research is challenging our thinking about the nature of human population differences. But he goes on to make the unfounded and irresponsible claim that this research is suggesting that genetic factors explain traditional stereotypes.

One of Mr. Wade’s key sources, for example, is the anthropologist Henry Harpending, who has asserted that people of sub-Saharan African ancestry have no propensity to work when they don’t have to because, he claims, they did not go through the type of natural selection for hard work in the last thousands of years that some Eurasians did. There is simply no scientific evidence to support this statement. Indeed, as 139 geneticists (including myself) pointed out in a letter to The New York Times about Mr. Wade’s book, there is no genetic evidence to back up any of the racist stereotypes he promotes.

Another high-profile example is James Watson, the scientist who in 1953 co-discovered the structure of DNA, and who was forced to retire as head of the Cold Spring Harbor Laboratories in 2007 after he stated in an interview — without any scientific evidence — that research has suggested that genetic factors contribute to lower intelligence in Africans than in Europeans.

At a meeting a few years later, Dr. Watson said to me and my fellow geneticist Beth Shapiro something to the effect of “When are you guys going to figure out why it is that you Jews are so much smarter than everyone else?” He asserted that Jews were high achievers because of genetic advantages conferred by thousands of years of natural selection to be scholars, and that East Asian students tended to be conformist because of selection for conformity in ancient Chinese society. (Contacted recently, Dr. Watson denied having made these statements, maintaining that they do not represent his views; Dr. Shapiro said that her recollection matched mine.)

What makes Dr. Watson’s and Mr. Wade’s statements so insidious is that they start with the accurate observation that many academics are implausibly denying the possibility of average genetic differences among human populations, and then end with a claim — backed by no evidence — that they know what those differences are and that they correspond to racist stereotypes. They use the reluctance of the academic community to openly discuss these fraught issues to provide rhetorical cover for hateful ideas and old racist canards.

This is why knowledgeable scientists must speak out. If we abstain from laying out a rational framework for discussing differences among populations, we risk losing the trust of the public and we actively contribute to the distrust of expertise that is now so prevalent. We leave a vacuum that gets filled by pseudoscience, an outcome that is far worse than anything we could achieve by talking openly.

If scientists can be confident of anything, it is that whatever we currently believe about the genetic nature of differences among populations is most likely wrong. For example, my laboratory discovered in 2016, based on our sequencing of ancient human genomes, that “whites” are not derived from a population that existed from time immemorial, as some people believe. Instead, “whites” represent a mixture of four ancient populations that lived 10,000 years ago and were each as different from one another as Europeans and East Asians are today.

So how should we prepare for the likelihood that in the coming years, genetic studies will show that many traits are influenced by genetic variations, and that these traits will differ on average across human populations? It will be impossible — indeed, anti-scientific, foolish and absurd — to deny those differences.

For me, a natural response to the challenge is to learn from the example of the biological differences that exist between males and females. The differences between the sexes are far more profound than those that exist among human populations, reflecting more than 100 million years of evolution and adaptation. Males and females differ by huge tracts of genetic material — a Y chromosome that males have and that females don’t, and a second X chromosome that females have and males don’t.

Most everyone accepts that the biological differences between males and females are profound. In addition to anatomical differences, men and women exhibit average differences in size and physical strength. (There are also average differences in temperament and behavior, though there are important unresolved questions about the extent to which these differences are influenced by social expectations and upbringing.)

How do we accommodate the biological differences between men and women? I think the answer is obvious: We should both recognize that genetic differences between males and females exist and we should accord each sex the same freedoms and opportunities regardless of those differences.

It is clear from the inequities that persist between women and men in our society that fulfilling these aspirations in practice is a challenge. Yet conceptually it is straightforward. And if this is the case with men and women, then it is surely the case with whatever differences we may find among human populations, the great majority of which will be far less profound.

An abiding challenge for our civilization is to treat each human being as an individual and to empower all people, regardless of what hand they are dealt from the deck of life. Compared with the enormous differences that exist among individuals, differences among populations are on average many times smaller, so it should be only a modest challenge to accommodate a reality in which the average genetic contributions to human traits differ.

It is important to face whatever science will reveal without prejudging the outcome and with the confidence that we can be mature enough to handle any findings. Arguing that no substantial differences among human populations are possible will only invite the racist misuse of genetics that we wish to avoid.

David Reich is a professor of genetics at Harvard and the author of the forthcoming book “Who We Are and How We Got Here: Ancient DNA and the New Science of the Human Past,” from which this article is adapted.

This is a recent Reich lecture with the same title as his forthcoming book.

Thursday, March 22, 2018

The Automated Physicist: Experimental Particle Physics in the Era of AI

My office will be recording some of the most interesting of the many talks that happen at MSU. I will post some of my favorites here on the blog. See the MSU Research channel on YouTube for more! Audio for this video isn't great, but we are making improvements in our process / workflow for capturing these presentations.

In this video, high energy physicist Harrison B. Prosper (Florida State University) discusses the history of AI/ML, Deep Learning, and applications to LHC physics and beyond.

The Automated Physicist: Experimental Particle Physics in the Age of AI

Abstract: After a broad brush review of the history of machine learning (ML), followed by a brief introduction to the state-of-the-art, I discuss the goals of researchers at the Large Hadron Collider and how machine learning is being used to help reach those goals. Inspired by recent breakthroughs in artificial intelligence (AI), such as Google's AlphaGoZero, I end with speculative ideas about how the exponentially improving ML/AI technology may, or may not, be helpful in particle physics research over the next few decades.

Wednesday, March 21, 2018

The Face of the Deep State: John Brennan perjury

Just for fun, Google John Brennan perjury and follow the trail. Here is former CIA Director Brennan raging at President Trump:

When the full extent of your venality, moral turpitude, and political corruption becomes known, you will take your rightful place as a disgraced demagogue in the dustbin of history. You may scapegoat Andy McCabe, but you will not destroy America...America will triumph over you. https://t.co/uKppoDbduj — John O. Brennan (@JohnBrennan) March 17, 2018

Here is The Guardian, charging Brennan with lying about CIA spying on the Senate in 2014. What do Democrat Senators Feinstein and Wyden think of Brennan's credibility? No need to guess, just keep reading.

Guardian: CIA director John Brennan lied to you and to the Senate. Fire him. (2014)

As reports emerged Thursday that an internal investigation by the Central Intelligence Agency’s inspector general found that the CIA “improperly” spied on US Senate staffers when researching the CIA’s dark history of torture, it was hard to conclude anything but the obvious: John Brennan blatantly lied to the American public. Again.

“The facts will come out,” Brennan told NBC News in March after Senator Dianne Feinstein issued a blistering condemnation of the CIA on the Senate floor, accusing his agency of hacking into the computers used by her intelligence committee’s staffers. “Let me assure you the CIA was in no way spying on [the committee] or the Senate,” he said.

After the CIA inspector general’s report completely contradicted Brennan’s statements, it now appears Brennan was forced to privately apologize to intelligence committee chairs in a “tense” meeting earlier this week. Other Senators on Thursday pushed for Brennan to publicly apologize and called for an independent investigation. Sen. Ron Wyden said it well:

Ron Wyden (@RonWyden)

@CIA broke into Senate computer files. Then tried to have Senate staff prosecuted. Absolutely unacceptable in a democracy.

July 31, 2014

Here is Brennan, under oath, claiming no knowledge of the origins of the Steele dossier or whether it was used in a FISA application -- May 23, 2017! Credible?

See also How NSA Tracks You (Bill Binney).

Wednesday, March 14, 2018

Stephen Hawking (1942-2018)

Roger Penrose writes in the Guardian, providing a scientifically precise summary of Hawking's accomplishments as a physicist (worth reading in full at the link). Penrose and Hawking collaborated to produce important singularity theorems in general relativity in the late 1960s.

Here is a nice BBC feature: A Brief History of Stephen Hawking. The photo above was taken at Hawking's Oxford graduation in 1962.

Stephen Hawking – obituary by Roger Penrose

... This radiation coming from black holes that Hawking predicted is now, very appropriately, referred to as Hawking radiation. For any black hole that is expected to arise in normal astrophysical processes, however, the Hawking radiation would be exceedingly tiny, and certainly unobservable directly by any techniques known today. But he argued that very tiny black holes could have been produced in the big bang itself, and the Hawking radiation from such holes would build up into a final explosion that might be observed. There appears to be no evidence for such explosions, showing that the big bang was not so accommodating as Hawking wished, and this was a great disappointment to him.

These achievements were certainly important on the theoretical side. They established the theory of black-hole thermodynamics: by combining the procedures of quantum (field) theory with those of general relativity, Hawking established that it is necessary also to bring in a third subject, thermodynamics. They are generally regarded as Hawking’s greatest contributions. That they have deep implications for future theories of fundamental physics is undeniable, but the detailed nature of these implications is still a matter of much heated debate.

... He also provided reasons for suspecting that the very rules of quantum mechanics might need modification, a viewpoint that he seemed originally to favour. But later (unfortunately, in my own opinion) he came to a different view, and at the Dublin international conference on gravity in July 2004, he publicly announced a change of mind (thereby conceding a bet with the Caltech physicist John Preskill) concerning his originally predicted “information loss” inside black holes.

Notwithstanding Hawking's premature 2004 capitulation to Preskill, information loss in black hole evaporation remains an open question in fundamental physics, nearly a half century after Hawking first recognized the problem in 1975. I read this paper as a graduate student, but with little understanding. I am embarrassed to say that I did not know a single person (student or faculty member) at Berkeley at the time (late 1980s) who was familiar with Hawking's arguments and who appreciated the deep implications of the results. This was true of most of theoretical physics -- despite the fact that even Hawking's popular book A Brief History of Time (1988) gives a simple version of the paradox. The importance of Hawking's observation only became clear to the broader community somewhat later, perhaps largely due to people like John Preskill and Lenny Susskind.
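Penrose's remark that Hawking radiation from astrophysical black holes is "exceedingly tiny" can be checked with the standard temperature formula for a non-rotating, uncharged black hole, T = ħc³/(8πGMk_B). The sketch below uses CODATA 2018 constants and an approximate solar mass; function and constant names are illustrative.

```python
from math import pi

HBAR = 1.054571817e-34  # reduced Planck constant, J s
C = 299_792_458.0       # speed of light, m / s
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23      # Boltzmann constant, J / K
M_SUN = 1.98847e30      # solar mass, kg (approximate)
T_CMB = 2.725           # present-day cosmic microwave background temperature, K


def hawking_temperature(mass_kg):
    """Hawking temperature T = hbar c^3 / (8 pi G M k_B) of a Schwarzschild black hole."""
    return HBAR * C**3 / (8 * pi * G * mass_kg * K_B)


def mass_at_temperature(temp_k):
    """Invert the formula: the black hole mass whose Hawking temperature equals temp_k."""
    return HBAR * C**3 / (8 * pi * G * K_B * temp_k)


print(f"solar-mass black hole: {hawking_temperature(M_SUN):.2e} K")        # about 6.2e-08 K
print(f"hotter than the CMB only below {mass_at_temperature(T_CMB):.1e} kg")  # about 4.5e22 kg
```

A solar-mass black hole comes out near 6 × 10⁻⁸ K, far colder than the 2.7 K microwave background, so it absorbs more radiation than it emits. Only holes lighter than roughly 4.5 × 10²² kg (somewhat less than the Moon's mass) are hotter than the CMB, consistent with Penrose's point that an observable signal would require very small black holes from the big bang.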

I have only two minor recollections to share about Hawking. The first, from my undergraduate days, is really more about Gell-Mann: Gell-Mann, Feynman, Hawking. The second is from a small meeting on the black hole information problem, at Institut Henri Poincaré in Paris in 2008. (My slides.) At the conference dinner I helped to carry Hawking and his motorized chair -- very heavy! -- into a fancy Paris restaurant (Paris restaurants are not, by and large, handicapped accessible). Over dinner I met Hawking's engineer -- the man who maintained the chair and its computer voice / controller system. He traveled everywhere with Hawking's entourage and had many interesting stories to tell. For example, Hawking's computer system was quite antiquated, but he refused to upgrade to something more advanced because he had grown used to it. The entourage required to keep Hawking going was rather large (nurses, engineer, driver, spouse), expensive, and, as you can imagine, had its own internal dramas.

Saturday, March 10, 2018

Risk, Uncertainty, and Heuristics

Risk: the space of outcomes and their probabilities are known. Uncertainty: the probabilities are not known, and even the space of possibilities may not be known. Heuristic rules are contrasted with algorithms such as maximization of expected utility.

See also Bounded Cognition and Risk, Ambiguity, and Decision (Ellsberg).
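A toy illustration of the risk/uncertainty distinction, with hypothetical payoffs treated directly as utilities. When state probabilities are known (risk), one can maximize expected utility; when they are not (uncertainty), a rule must work without them. Maximin (choose the action whose worst outcome is best) is used here as one classic probability-free rule; it is not a claim about which heuristics Gigerenzer or others favor.

```python
from typing import Dict, List

# Hypothetical payoffs for each action in two states of the world: [good, bad]
PAYOFFS: Dict[str, List[float]] = {
    "safe": [3.0, 3.0],     # same payoff in either state
    "risky": [10.0, -5.0],  # large gain in the good state, loss in the bad state
}


def expected_utility_choice(payoffs: Dict[str, List[float]], probs: List[float]) -> str:
    """Under risk: probabilities are known, so pick the action with highest expected payoff."""
    def eu(action: str) -> float:
        return sum(p * x for p, x in zip(probs, payoffs[action]))
    return max(payoffs, key=eu)


def maximin_choice(payoffs: Dict[str, List[float]]) -> str:
    """Under uncertainty: ignore probabilities and pick the action with the best worst case."""
    return max(payoffs, key=lambda action: min(payoffs[action]))


print(expected_utility_choice(PAYOFFS, [0.7, 0.3]))  # risky (EU 5.5 vs 3.0)
print(maximin_choice(PAYOFFS))                        # safe (worst case 3 vs -5)
```

The two rules disagree here: expected-utility maximization needs (and exploits) the probabilities, while maximin gives up that information in exchange for robustness when the probabilities are unknown or untrustworthy.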

Here's a well-known 2007 paper by Gigerenzer et al.

Helping Doctors and Patients Make Sense of Health Statistics

Gigerenzer G, Gaissmaier W, Kurz-Milcke E, Schwartz LM, Woloshin S.

Many doctors, patients, journalists, and politicians alike do not understand what health statistics mean or draw wrong conclusions without noticing. Collective statistical illiteracy refers to the widespread inability to understand the meaning of numbers. For instance, many citizens are unaware that higher survival rates with cancer screening do not imply longer life, or that the statement that mammography screening reduces the risk of dying from breast cancer by 25% in fact means that 1 less woman out of 1,000 will die of the disease. We provide evidence that statistical illiteracy (a) is common to patients, journalists, and physicians; (b) is created by nontransparent framing of information that is sometimes an unintentional result of lack of understanding but can also be a result of intentional efforts to manipulate or persuade people; and (c) can have serious consequences for health. The causes of statistical illiteracy should not be attributed to cognitive biases alone, but to the emotional nature of the doctor-patient relationship and conflicts of interest in the healthcare system. The classic doctor-patient relation is based on (the physician's) paternalism and (the patient's) trust in authority, which make statistical literacy seem unnecessary; so does the traditional combination of determinism (physicians who seek causes, not chances) and the illusion of certainty (patients who seek certainty when there is none). We show that information pamphlets, Web sites, leaflets distributed to doctors by the pharmaceutical industry, and even medical journals often report evidence in nontransparent forms that suggest big benefits of featured interventions and small harms. Without understanding the numbers involved, the public is susceptible to political and commercial manipulation of their anxieties and hopes, which undermines the goals of informed consent and shared decision making. What can be done? 
We discuss the importance of teaching statistical thinking and transparent representations in primary and secondary education as well as in medical school. Yet this requires familiarizing children early on with the concept of probability and teaching statistical literacy as the art of solving real-world problems rather than applying formulas to toy problems about coins and dice. A major precondition for statistical literacy is transparent risk communication. We recommend using frequency statements instead of single-event probabilities, absolute risks instead of relative risks, mortality rates instead of survival rates, and natural frequencies instead of conditional probabilities. Psychological research on transparent visual and numerical forms of risk communication, as well as training of physicians in their use, is called for. Statistical literacy is a necessary precondition for an educated citizenship in a technological democracy. Understanding risks and asking critical questions can also shape the emotional climate in a society so that hopes and anxieties are no longer as easily manipulated from outside and citizens can develop a better-informed and more relaxed attitude toward their health.
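Two of the abstract's recommended translations, from relative to absolute risk and from conditional probabilities to natural frequencies, are simple arithmetic. Here is a minimal sketch. The abstract's own mammography example fixes the baseline: if a 25% relative reduction means 1 fewer death per 1,000, the baseline must be 4 deaths per 1,000, since 25% of 4 is 1. The screening-test characteristics used for the natural-frequency part (1% prevalence, 90% sensitivity, 9% false-positive rate) are illustrative assumptions. They are not taken from the abstract.

```python
def absolute_risk_reduction(baseline_per_1000: float, relative_reduction: float) -> float:
    """Convert a relative risk reduction into 'fewer events per 1,000 people'."""
    return baseline_per_1000 * relative_reduction


def natural_frequencies(population: int, prevalence: float,
                        sensitivity: float, false_positive_rate: float):
    """Restate conditional probabilities as expected counts in a concrete population."""
    sick = population * prevalence
    healthy = population - sick
    true_positives = sick * sensitivity              # sick and test positive
    false_positives = healthy * false_positive_rate  # healthy but test positive
    return true_positives, false_positives


# Relative vs absolute risk: 25% of a baseline of 4 deaths per 1,000 women.
arr = absolute_risk_reduction(baseline_per_1000=4, relative_reduction=0.25)
print(f"{arr:.0f} fewer death(s) per 1,000 women screened")

# Natural frequencies, using assumed (illustrative) test characteristics.
tp, fp = natural_frequencies(1000, prevalence=0.01, sensitivity=0.9,
                             false_positive_rate=0.09)
print(f"Of about {tp + fp:.0f} women who test positive, about {tp:.0f} have the disease")
print(f"P(disease | positive test) = {tp / (tp + fp):.2f}")
```

Framed as "about 9 of every 98 women who test positive," the answer is much easier to see than when the same numbers are given as a conditional probability. That is the point of the recommendation.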

Wednesday, March 07, 2018

The Ballad of Bedbug Eddie and the Golden Rule

This is a bedtime story I made up for my kids when they were small. See also Isabel and the dwarf king.

Once upon a time, there was a tiny bedbug named Eddie, who was no bigger than a sesame seed. Like all bedbugs, Eddie lived by eating the blood of humans. Every night he crawled out of the bedding and bit his sleeping victim.

One night a strange idea entered Eddie's mind. Are there little bugs that bite me when I sleep? he wondered. That would be terrible! (Little did Eddie know that there was a much smaller bug named Mini who lived in his left antenna, and who drank his blood! But that is another story...)

Suddenly, Eddie had an inspiration. It was wrong to bite other people and drink their blood. If I don't like it, he thought, I shouldn't do it to other people!

From that moment on, Eddie resolved to never bite another creature. He would have to find a source of food other than blood!

Eddie lay in his bedding nest and wondered what he would do next. He had never eaten any other kind of food. He realized that to survive, he would have to search out a new kind of meal.

When the sun came up, Eddie decided he should leave his bed in search of food. He wandered through the giant house, with its fuzzy carpeting and enormous potted plants. Finally he came upon the cool, smooth floor of the kitchen. Smelling something edible, he continued toward the breakfast table.

Soon enough, he encountered the biggest chunk of food he had ever seen. It was a hundred times bigger than Eddie, and smelled of peanut butter -- it was a crumb of toast! Then Eddie realized the entire floor under the table was covered with crumbs -- bread, cracker, muffin, even fruit and vegetable crumbs!

Eddie jumped onto the peanut butter toast crumb and started to eat. He was very hungry after missing his usual midnight meal. He ate until he was very full. It took some getting used to peanut butter -- not his usual blood meal! But he would manage.

Suddenly, a huge crumb fell from the sky and almost crushed Eddie. He barely managed to jump out of the way of the huge block of cereal, wet with milk. Looking up, he saw a giant figure on a chair, who was spraying crumbs all around as he gobbled up his breakfast.

The Crumb King! exclaimed Eddie. The Crumb King provides us with sustenance!

Hello Crumb King, shouted Eddie. Look out below! You almost crushed me with that cereal! he yelled.

Between crunches of cereal, Max heard a tiny voice from below. Surprised, he looked down at the small black dot, no bigger than a sesame seed. Are you a bug? he asked.

I am bedbug Eddie! responded Eddie. Don't crush me with crumbs! he shouted.

From that day on, Eddie and Max were great friends.

Eddie became a vegetarian and devoted his life to teaching the Golden Rule: "Do unto others as you would have them do unto you." (Matthew 7:12)

Better to be Lucky than Good?

The arXiv paper below looks at stochastic dynamical models that can transform initial (e.g., Gaussian) talent distributions into power law outcomes (e.g., observed wealth distributions in modern societies). While the models themselves may not be entirely realistic, they illustrate the potentially large role of luck relative to ability in real life outcomes.

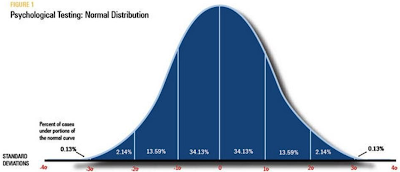

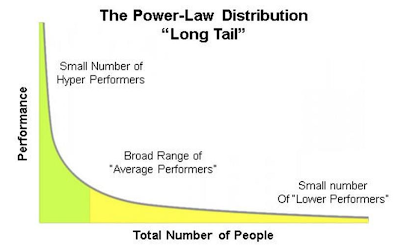

We're used to seeing correlations reported, often between variables that have been standardized so that both are normally distributed. I've written about this many times in the past: Success, Ability, and All That; Success vs Ability.

But wealth typically follows a power law distribution:

Of course, it might be the case that better measurements would uncover a power law distribution of individual talents. But it's far more plausible to me that random fluctuations + nonlinear amplifications transform, over time, normally distributed talents into power law outcomes.
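Below is a minimal toy sketch of "Gaussian talent + random fluctuations + nonlinear (multiplicative) amplification." It is written in the spirit of the agent-based model described below, but it is not that paper's exact setup. Every parameter is an assumption chosen for illustration: talent drawn from Normal(0.6, 0.1) and clipped to [0, 1], starting capital of 10, 80 time steps, and a 10% chance per step of an event. A lucky event doubles capital with probability equal to the agent's talent. An unlucky event halves capital regardless of talent. Because capital only changes by factors of 2, log-capital performs a random walk, so this simple version gives a strongly skewed, roughly lognormal outcome rather than a strict power law.

```python
import random
import statistics


def simulate(n_agents=1000, steps=80, p_event=0.1, seed=0):
    """Toy talent-vs-luck model; all parameters are illustrative assumptions."""
    rng = random.Random(seed)
    # Talent is Gaussian, clipped to [0, 1] so it can act as a probability.
    talent = [min(1.0, max(0.0, rng.gauss(0.6, 0.1))) for _ in range(n_agents)]
    capital = [10.0] * n_agents
    for _ in range(steps):
        for i in range(n_agents):
            if rng.random() >= p_event:
                continue  # nothing happens to this agent this step
            if rng.random() < 0.5:
                # Lucky event: talent only determines whether it is exploited.
                if rng.random() < talent[i]:
                    capital[i] *= 2.0
            else:
                capital[i] /= 2.0  # unlucky event halves capital
    return talent, capital


talent, capital = simulate()
richest = max(range(len(capital)), key=capital.__getitem__)
most_talented = max(range(len(talent)), key=talent.__getitem__)
top_k = max(1, len(capital) // 100)  # size of the top 1% of agents
top_share = sum(sorted(capital)[-top_k:]) / sum(capital)

print(f"median capital: {statistics.median(capital):.2f}")
print(f"max capital:    {max(capital):.1f}")
print(f"richest agent's talent: {talent[richest]:.2f} "
      f"(highest talent in population: {talent[most_talented]:.2f})")
print(f"share of capital held by the top 1%: {top_share:.0%}")
```

Printing the richest agent's talent next to the population maximum makes it easy to check, seed by seed, whether the winner in this toy world is merely above-average and lucky rather than the most talented agent.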


Talent vs Luck: the role of randomness in success and failure

https://arxiv.org/pdf/1802.07068.pdf

The largely dominant meritocratic paradigm of highly competitive Western cultures is rooted on the belief that success is due mainly, if not exclusively, to personal qualities such as talent, intelligence, skills, smartness, efforts, willfulness, hard work or risk taking. Sometimes, we are willing to admit that a certain degree of luck could also play a role in achieving significant material success. But, as a matter of fact, it is rather common to underestimate the importance of external forces in individual successful stories. It is very well known that intelligence (or, more in general, talent and personal qualities) exhibits a Gaussian distribution among the population, whereas the distribution of wealth - often considered a proxy of success - follows typically a power law (Pareto law), with a large majority of poor people and a very small number of billionaires. Such a discrepancy between a Normal distribution of inputs, with a typical scale (the average talent or intelligence), and the scale invariant distribution of outputs, suggests that some hidden ingredient is at work behind the scenes. In this paper, with the help of a very simple agent-based toy model, we suggest that such an ingredient is just randomness. In particular, we show that, if it is true that some degree of talent is necessary to be successful in life, almost never the most talented people reach the highest peaks of success, being overtaken by mediocre but sensibly luckier individuals. As to our knowledge, this counterintuitive result - although implicitly suggested between the lines in a vast literature - is quantified here for the first time. It sheds new light on the effectiveness of assessing merit on the basis of the reached level of success and underlines the risks of distributing excessive honors or resources to people who, at the end of the day, could have been simply luckier than others.
With the help of this model, several policy hypotheses are also addressed and compared to show the most efficient strategies for public funding of research in order to improve meritocracy, diversity and innovation.

Here is a specific example of random fluctuations and nonlinear amplification:

Nonlinearity and Noisy Outcomes: ... The researchers placed a number of songs online and asked volunteers to rate them. One group rated them without seeing others' opinions. In a number of "worlds" the raters were allowed to see the opinions of others in their world. Unsurprisingly, the interactive worlds exhibited large fluctuations, in which songs judged as mediocre by isolated listeners rose on the basis of small initial fluctuations in their ratings (e.g., in a particular world, the first 10 raters may have all liked an otherwise mediocre song, and subsequent listeners were influenced by this, leading to a positive feedback loop).

It isn't hard to think of a number of other contexts where this effect plays out. Think of the careers of two otherwise identical competitors (e.g., in science, business, academia). The one who enjoys an initial positive fluctuation may be carried along far beyond their competitor, for no reason of superior merit. The effect also appears in competing technologies, brands, and fashion trends.

If outcomes are so noisy, then successful prediction is more a matter of luck than skill. The successful predictor is not necessarily a better judge of intrinsic quality, since quality is swamped by random fluctuations that are amplified nonlinearly. This picture undermines the rationale for the high compensation awarded to certain CEOs, studio and recording executives, even portfolio managers. ...
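The positive feedback loop in the song experiment can be illustrated with a Pólya-urn toy model. Every song has identical intrinsic appeal, and each new listener picks a song with probability proportional to a fixed prior weight plus the number of earlier downloads. This is a sketch under stated assumptions, not the experiment's actual protocol. The number of songs, number of listeners, and prior weight are all hypothetical. In a classic Pólya urn, the long-run shares settle to random values that are largely fixed by the earliest draws. So each independent "world" tends to crown a different winner, even though no song is better than any other.

```python
import random


def run_world(n_songs=10, n_listeners=2000, prior=1.0, seed=None):
    """One 'world': listeners choose songs in proportion to prior + past downloads.

    All songs share the same prior weight (identical quality), so differences
    in final popularity come only from early random choices amplified by
    social influence.
    """
    rng = random.Random(seed)
    downloads = [0] * n_songs
    for _ in range(n_listeners):
        weights = [prior + d for d in downloads]
        choice = rng.choices(range(n_songs), weights=weights)[0]
        downloads[choice] += 1
    return downloads


for world in range(4):
    counts = run_world(seed=world)
    leader = max(range(len(counts)), key=counts.__getitem__)
    share = counts[leader] / sum(counts)
    print(f"world {world}: song {leader} leads with {share:.0%} of downloads")
```

Raising `prior` weakens the feedback: each past download then counts for relatively less, and shares stay closer to equal. That parameter therefore serves as a crude knob for how much social influence listeners feel.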

Saturday, March 03, 2018

Big Tech compensation in 2018

I don't work in Big Tech so I don't know whether his numbers are realistic. If they are realistic, then I'd say careers in Big Tech (for someone with the ability to do high-level software work) dominate all the other (risk-adjusted) options right now. This includes finance, startups, etc.

No wonder the cost of living in the Bay Area is starting to rival Manhattan!

Anyone care to comment?

Meanwhile, in the low-skill part of the economy:

The Economics of Ride-Hailing: Driver Revenue, Expenses and Taxes

MIT Center for Energy and Environmental Policy Research

We perform a detailed analysis of Uber and Lyft ride-hailing driver economics by pairing results from a survey of over 1100 drivers with detailed vehicle cost information. Results show that per hour worked, median profit from driving is $3.37/hour before taxes, and 74% of drivers earn less than the minimum wage in their state. 30% of drivers are actually losing money once vehicle expenses are included. On a per-mile basis, median gross driver revenue is $0.59/mile but vehicle operating expenses reduce real driver profit to a median of $0.29/mile. For tax purposes the $0.54/mile standard mileage deduction in 2016 means that nearly half of drivers can declare a loss on their taxes. If drivers are fully able to capitalize on these losses for tax purposes, 73.5% of an estimated U.S. market $4.8B in annual ride-hailing driver profit is untaxed.

Note: Uber disputes this result and claims the low hourly figure is due in part to the researchers misinterpreting one of the survey questions. Uber's analysis puts hourly compensation at ~$15.
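The per-mile figures in the MIT abstract support a quick back-of-envelope check. This sketch treats the three medians as if they described one representative driver. That is a simplification, since medians of different quantities need not come from the same driver. On that assumption, implied vehicle expenses are about $0.30/mile. After the $0.54/mile standard deduction, only about $0.05/mile shows up as taxable income, compared with $0.29/mile of real profit. This single-driver number is not the paper's 73.5% estimate, which is computed over the whole population of drivers.

```python
# Per-mile medians quoted in the abstract (USD per mile, 2016).
GROSS_REVENUE = 0.59
REAL_PROFIT = 0.29
STANDARD_MILEAGE_DEDUCTION = 0.54


def per_mile_breakdown(gross, profit, deduction):
    """Return (implied expenses, taxable income, share of real profit untaxed).

    A negative taxable income means the driver can declare a loss for tax
    purposes, even though real profit may still be positive.
    """
    expenses = gross - profit        # vehicle operating costs per mile
    taxable = gross - deduction      # income reported after the deduction
    untaxed_share = 1 - taxable / profit
    return expenses, taxable, untaxed_share


expenses, taxable, untaxed = per_mile_breakdown(
    GROSS_REVENUE, REAL_PROFIT, STANDARD_MILEAGE_DEDUCTION)
print(f"implied expenses: ${expenses:.2f}/mile")
print(f"taxable income:   ${taxable:.2f}/mile vs ${REAL_PROFIT:.2f}/mile real profit")
print(f"share of real profit not taxed: {untaxed:.0%}")
```

The gap comes from the deduction ($0.54/mile) being much larger than the implied actual expenses (~$0.30/mile). Any driver whose gross revenue per mile falls below $0.54 reports a tax loss.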

How NSA Tracks You (Bill Binney)

Anyone who is paying attention knows that the Obama FBI/DOJ used massive government surveillance powers against the Trump team during and after the election. A FISA warrant on Carter Page (and Manafort and others?) was likely used to mine stored communications of other Trump team members. Hundreds of "mysterious" unmasking requests by Susan Rice, Samantha Power, etc. were probably used to identify US individuals captured in this data.

I think it's entirely possible that Obama et al. thought they were doing the right (moral, patriotic) thing -- they really thought that Trump might be colluding with the Russians. But as a civil libertarian and rule of law kind of guy I want to see it all come to light. I have been against this kind of thing since GWB was president -- see this post from 2005!

My guess is that NSA is intercepting and storing big chunks of, perhaps almost all, US email traffic. They're getting almost all metadata from email and phone traffic, and possibly much of the actual voice traffic as well, converted to text using voice recognition. This used to be searchable only by a limited number of NSA people (although that number grew a lot over the years; see 2013 article and LOVEINT below), but thanks to Obama it is now available to many different "intel" agencies in the government.

Situation in 2013: https://www.npr.org/templates/story/story.php?storyId=207195207

(Note: a Title 1 FISA warrant grants the capability to look at all associates of the target... like the whole Trump team.)

Obama changes in 2016: https://www.nytimes.com/2016/02/26/us/politics/obama-administration-set-to-expand-sharing-of-data-that-nsa-intercepts.html

NYT: “The new system would permit analysts at other intelligence agencies to obtain direct access to raw information from the N.S.A.’s surveillance to evaluate for themselves. If they pull out phone calls or email to use for their own agency’s work, they would apply the privacy protections masking innocent Americans’ information... ” HA HA HA I guess that's what all the UNmasking was about...

More on NSA capabilities: https://en.wikipedia.org/wiki/LOVEINT (think how broad their coverage has to be for spooks to be able to spy on their wife or girlfriend)

See also FISA, EO 12333, Bulk Collection, and All That.

Wikipedia: William Edward Binney[3] is a former highly placed intelligence official with the United States National Security Agency (NSA)[4] turned whistleblower who resigned on October 31, 2001, after more than 30 years with the agency.From the transcript of Binney's talk:

He was a high-profile critic of his former employers during the George W. Bush administration, and later criticized the NSA's data collection policies during the Barack Obama administration.

07:452016 FISC reprimand of Obama administration. The court learned in October 2016 that analysts at the National Security Agency were conducting prohibited database searches “with much greater frequency than had previously been disclosed to the court.” The forbidden queries were searches of Upstream Data using US-person identifiers. The report makes clear that as of early 2017 NSA Inspector General did not even have a good handle on all the ways that improper queries could be made to the system. (Imagine Snowden-like sys admins with a variety of tools that can be used to access raw data.) Proposed remedies to the situation circa-2016/17 do not inspire confidence (please read the FISC document).

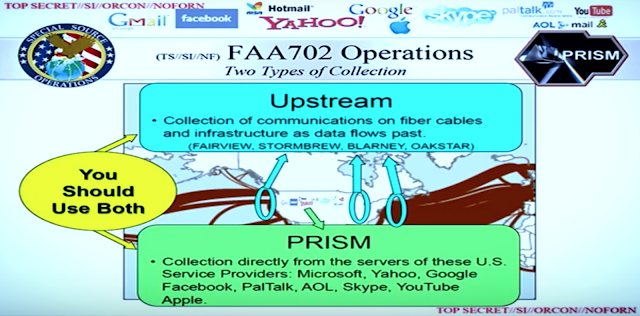

ways that they basically collect data

07:48

first it's they use the corporations

07:50

that run the fiber-optic lines and they

07:53

get them to allow them to put taps on

07:55

them and I'll show you some of the taps

07:57

where they are and and if that doesn't

07:59

work they use the foreign government to

08:00

go at their own telecommunications

08:02

companies to do the similar thing and if

08:04

that doesn't work they'll tap the line

08:06

anywhere they can get to it and they

08:08

won't even know it you know the

08:09

government's know that communications

08:11

companies will even though they're

08:12

tapped so that's how they get into it

08:14

then I get into fiber lines and this is

08:17

this is a the prism program ...

that was published

08:30

out of the Snowden material and they've

08:32

all focused on prism well prism is

08:36

really the the minor program I mean the

08:40

major program is upstream that's where

08:42

they have the fiber-optic taps on

08:43

hundreds of places around in the world

08:45

that's where they're collecting off the

08:47

fiber lined all the data and storing it
